Even experienced programmers need a reminder about the cost of system calls.

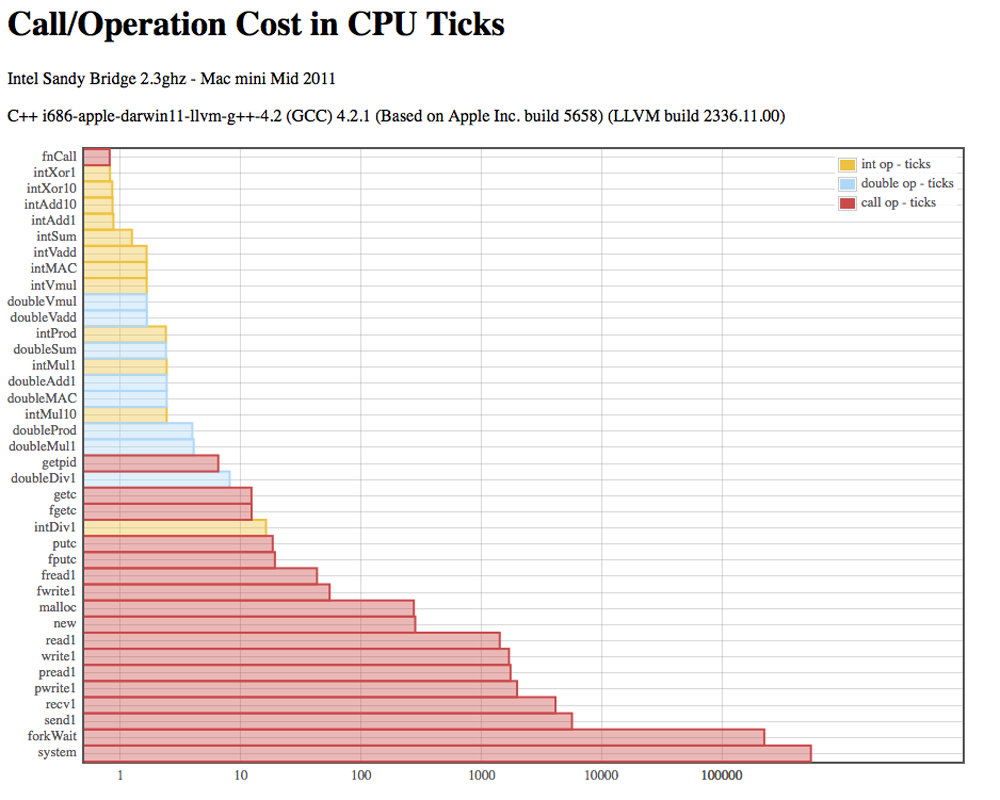

These rounded numbers express the relative cost of operations and calls in CPU ticks measured on Intel Core 2 processors. Please see the graphs below for actual measured numbers.

| Operation | Measured-Rounded |

|---|---|

| branch / loop | 0 |

| intXor | 1 |

| function call | 1 |

| stack object | 2 |

| memcpy 512 bytes | 50 |

| fread | fwrite | 50 |

| malloc + free | 400 |

| new heap object | 400 |

| read | write | 2,500 |

| recv | send | 5,000 |

| fork + wait | system | 300,000 |

Profiling is recommended for performance tuning - see also cachegrind and kcachegrind.

mongo-c-driver - mongo_read_response()

The above sample has several flaws in function mongo_read_response.

Socket timeout are missed, the function continues with the wire protocol out-of-sync, resulting in garbage data, and the users program crashes. If the error code is checked and an error returned from the function, then the user can take action like closing and reconnecting.

Socket calls are expensive, on the order of 5000 CPU ticks as seen in the table below, the most expensive system call that is commonly invoked. In the example above, the first two socket reads can be easily combined into one read, and the data can be copied if needed with close to no cost relative to the socket read cost.

It's most probable that the MongoDB server will send the complete response as a single send. It's most probable that the driver can get the complete response in a single recv. A simple solution would be to do single recv request into a buffer of max_message_size. A more sophisticated solution would be to use elastic buffers. Do a first recv to the current buffer size. From the message header, you now have the length that you need to know, and you can grow the buffer and recv the remainder of the message only if needed.

If the server sends more than one response as a single send and if a single recv gets more than one response, then the next obvious solution is to use stream input/output. Investigate fdopen and remember dup and flush(es) are probably needed, or you can roll your own stream IO. If you write your BSON decoder to use stream IO, you may even avoid intermediate buffers. Multithreading and large bulk 16MB documents introduce complications, but I'm certain that smart people can come up with smart solutions - that's why we are needed.

The cost of socket calls is highly significant. Note that they induce system CPU time and the cost will not be reflected in user CPU time. If you have performance requirements, please start your investigating improvements here!

The sample function forces a malloc on each call. While malloc/free is not as expensive as an IO system call, it is next with a cost of around 400 CPU ticks. Many-many programs are dominated by malloc overhead for user CPU time.

This is an unbalanced malloc which is problematic. A standard practice for correctness is to have the malloc and its matching free co-located in the same function or module to avoid memory allocation problems like memory leaks. This sample function forces the user to have a call to free this memory.

A better solution would be to not malloc internally supply buffer resources to the function call. This has the possibility of zero malloc overhead per response-read call. The buffers can be pooled and allocated once at startup or perhaps elastically on demand. This essentially eliminates malloc overhead for the buffers needed for reading a response.

Comparison Table - includes all processors measured above.

Sandy Bridge - Instruction Decode and uop Cache - for some clue about sub-CPU-tick measurements due to micro-ops.